Countdown to compliance: Australia’s social media age ban

16 Nov 2025

Australia is in the midst of rolling out one of the most ambitious child online safety reforms in the world: a ban on social media access for children under 16.

The law applies to "age-restricted social media platforms", defined as services that meet all of the following criteria:

- the sole purpose, or a significant purpose, of the service is to enable online social interaction between two or more end-users;

- the service allows end-users to link to, or interact with, some or all of the other end-users; and

- the service allows end-users to post material on the service.

Platforms whose sole or primary purpose is to provide messaging, email, voice or video calling, online gaming, product or service reviews and advice, professional networking or development, or to support education or health have been excluded under the legislative rules published by the Minister for Communications in July 2025. However, the eSafety Commissioner has cautioned that messaging services with social-media-style features may still fall within scope.

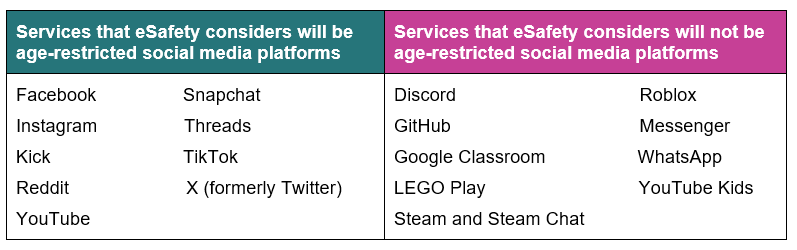

The eSafety Commissioner confirmed on 5 November 2025 its view on the scope of platforms covered by the ban:

With the ban scheduled to take effect next month, social media platforms operating in Australia have only weeks left to develop and roll out systems to enforce age restrictions. The reform has sparked debate not only about its feasibility but also about its implications for privacy, surveillance, and digital rights.

What The Law Covers

The Online Safety Amendment (Social Media Minimum Age) Act 2024, which received Royal Assent in December 2024, amends the Online Safety Act 2021. The Social Media Minimum Age (SMMA) scheme was framed as part of the federal government's child safety agenda, aimed at reducing exposure to harmful online content, cyberbullying, and grooming.

In September 2025, eSafety published regulatory guidance detailing what ‘reasonable steps’ age-restricted social media platforms must take to prevent age-restricted users from having accounts, including guiding principles for the implementation of age assurance to meet SMMA obligations. Importantly, the eSafety Commissioner has emphasised that the legislation is not a ban, but rather a delay to account eligibility. Whilst under-age users and parents will not face penalties, platforms may be fined up to 30,000 civil penalty units if they fail to take reasonable steps to prevent access.

In October 2025, the Office of the Australian Information Commissioner (OAIC) also published regulatory guidance on the SMMA scheme, emphasising that entities must be aware that the additional privacy obligations under the scheme apply alongside the Privacy Act 1988 (Privacy Act) and the Australian Privacy Principles.

“The OAIC is committed to ensuring the successful rollout of the SMMA regime by robustly applying and regulating the privacy rules contained in the legislation, in order to reassure the Australian community that their privacy is protected,” said Privacy Commissioner Carly Kind.

The Trial That Will Shape Enforcement

Central to compliance is the adoption of age assurance mechanisms - technologies that can verify or estimate a user’s age with a degree of reliability sufficient to meet regulatory expectations. To support implementation, the federal government commissioned a consortium headed by the world-leading Age Check Certification Scheme (ACCS) to conduct an Age Assurance Technology Trial.

ACCS, a UK-based conformity assessment body for age assurance technologies, was tasked with providing a final report to the Australian Government by mid-2025 examining age verification, age estimation, age inference, parental certification or controls, technology stack deployments and technology readiness assessments in the Australian context.

The trial's objectives included assessing the reliability of these tools, evaluating their privacy and security impacts, and identifying implementation challenges for industry. That is, the trial aimed to strike an effective balance between blocking under-age users from creating accounts on restricted platforms and safeguarding Australians' privacy, data security, and freedom of expression.

Transparency Under Fire

In early August 2025, the trial attracted attention following the resignation of two members of its stakeholder advisory board. The board, comprising tech company leaders, child safety advocates, academics and privacy advocates, was established to provide independent oversight and diverse input for the trial.

The resignations were linked to concerns about transparency and whether privacy risks were adequately interrogated. Guardian Australia reported that John Pane, chair of Electronic Frontiers Australia and former advisory board member for the trial, was concerned the trial had drifted towards a “tick-box” privacy compliance exercise for vendors.

The final report, comprising 10 volumes and individual assessments of each age assurance method evaluated, was submitted to the Communications Minister on 1 August 2025 and released to the public on 31 August 2025.

Privacy vs Protection: The Balancing Act Ahead

At the heart of the issue is trust. Age assurance technologies - particularly those involving facial scanning or large-scale data collection - pose significant risks if not subject to independent, transparent oversight. There is a risk that Australia could end up normalising intrusive surveillance for all internet users, not just children.

For parents and young people, and realistically all Australians, the concern is twofold:

- Effectiveness – can these age assurance technologies truly prevent determined under-age users from bypassing restrictions?

- Safety – will the verification process itself expose users to greater privacy risks than the harms it is designed to prevent?

From a compliance perspective, several considerations arise:

- Proportionality and necessity – how will regulators assess whether chosen technologies meet the requirement of being a “reasonable step”, and how will proportionality be evaluated where privacy risks are significant?

- Data protection – age assurance often requires the collection or processing of biometric or identity information, which is personal information (and, in the case of biometric information, sensitive information) for the purposes of the Privacy Act. Governance around retention, secondary use, and security will be critical.

- Transparency – both service providers and regulators face the challenge of demonstrating transparency in how solutions are selected and applied, without compromising security or enabling circumvention.

- International alignment – similar debates are underway in the UK, EU, and US. Australia's framework could influence other countries if the design, monitoring, and enforcement of the regime prove effective and viable.

Whether Australia can deliver a system that both protects children online and respects the privacy of all internet users remains an open question - and one with international significance. Other jurisdictions, from the UK to the US, are closely watching Australia’s progress as they grapple with their own child online safety debates.

This article was written by Ariel Bastian, Senior Associate Corporate Commercial and Anna Kosterich, Restricted Practitioner Corporate Commercial.